A technical guide to distributed aperture radar

Modern vehicles are hotbeds of innovation. Advances in sensor technologies, artificial intelligence algorithms and power-efficient microprocessors make multiple advanced driver assistance systems (ADAS) possible. Many ADAS features demand low-latency, high-bandwidth connections to other ADAS electronic control units (ECUs).

Adaptive cruise control (ACC) interacts with braking and throttle ECUs, taking inputs from radar, LiDAR and camera sensors. These functions need an accurate, reliable and comprehensive view of the road ahead. Sensor fusion algorithms combine individual, disparate sensor data streams to create that holistic view.
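Sensor fusion can take many forms, and the article does not say which algorithms a given ADAS uses. As a minimal sketch of one textbook technique, inverse-variance weighting of independent estimates of the same quantity, the snippet below fuses a radar range and a camera range to one object. The `Measurement` type, the `fuse` helper and all values and variances are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # estimate of one quantity, e.g. range to a target in metres
    variance: float  # the sensor's measurement variance, in m^2


def fuse(measurements: list[Measurement]) -> Measurement:
    """Inverse-variance weighted fusion of independent estimates of one quantity."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is smaller than the smallest input variance.
    return Measurement(value=value, variance=1.0 / total)


radar = Measurement(value=50.2, variance=0.04)  # hypothetical: precise radar range
camera = Measurement(value=49.0, variance=1.0)  # hypothetical: noisier camera range
fused = fuse([radar, camera])
print(f"{fused.value:.3f} m, sigma = {math.sqrt(fused.variance):.3f} m")
```

The more precise sensor dominates the result, and the fused uncertainty is lower than either input's. This is one way redundancy improves robustness.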

Combining different data sources overcomes the limitations of any single sensor. Some ADAS now also include microphones to detect audible warnings or unseen hazards, such as the siren of an approaching emergency vehicle. Sensor fusion makes vehicles safer by alerting drivers to hazards they may not have seen or heard. ADAS functions are also integral to the operation of semi- and fully autonomous vehicles.

Cameras struggle in low light or mist, whereas radar sees clearly. Each sensor type has its strengths, but combining their data gives a more detailed view. Sensor fusion improves perception and provides operational robustness through redundancy. Cost matters too: LiDAR offers outstanding short-range detail but costs more than radar, while radar subsystems are more affordable and better suited to longer-range detection.

What field of view does ADAS need?

Complete autonomy depends on continued progress in designing safer vehicles. So far, most ADAS are forward-looking, with a field of view (FOV) of 90 to 120 degrees. Some vehicles offer a 360-degree view using multiple cameras, but with compromises in spatial accuracy and object detection.

For some assistance and automated functions, such as self-parking, a 360-degree bird's-eye view is essential. Vehicle manufacturers are implementing 360-degree all-around detection, but fitting multiple radar, LiDAR and camera sensors around the car adds significant cost and takes up space, which affects the vehicle's aesthetics.
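To put the cost of all-around detection in concrete terms, here is a back-of-envelope count of identical sensors needed to cover 360 degrees, assuming the 90 to 120 degree FOV mentioned above. The function name and the `overlap_deg` margin shared between neighbouring sensors are illustrative assumptions, not figures from the article.

```python
import math


def sensors_for_full_coverage(fov_deg: float, overlap_deg: float = 0.0) -> int:
    """Minimum number of identical sensors that cover 360 degrees in azimuth.

    Each sensor adds (fov_deg - overlap_deg) degrees of new coverage, where
    overlap_deg is a hypothetical margin shared with its neighbour.
    """
    effective = fov_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360.0 / effective)


for fov in (90, 120):
    print(fov, sensors_for_full_coverage(fov), sensors_for_full_coverage(fov, overlap_deg=10))
```

This count applies to each sensor type. A car that needs full radar, LiDAR and camera coverage multiplies it across technologies. Real layouts typically differ, for example by mixing sensors with different FOVs.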

How radar combines optimum sensing with scalability for ADAS

Of all the sensing technologies, radar is the only one that can operate in all environmental conditions: day, night, rain, mist, snow and more. Because it is less susceptible to performance degradation than other sensing methods, it is ideal for ADAS applications. Its competitive pricing also gives automotive manufacturers an opportunity to reduce their bill of materials costs.

However, to become the sole sensor technology in ADAS, radar needs more precise object identification and better spatial accuracy. Angular resolution is determined by the size and number of antennas, which together set the radar's aperture. A radar with a larger aperture detects and locates objects more accurately, but it needs more physical space, particularly in front-facing radars. Large antennas affect the vehicle's appearance and can be difficult to integrate.
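The link between aperture size and angular resolution can be made concrete with the common rule of thumb θ ≈ λ/D, where θ is in radians, λ is the wavelength and D is the aperture. The sketch below assumes a 77 GHz carrier, within the 76 to 81 GHz automotive radar band. The exact constant depends on how resolution is defined and on antenna tapering, so the results are order-of-magnitude estimates only.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def wavelength(freq_hz: float) -> float:
    """Free-space wavelength in metres."""
    return C / freq_hz


def aperture_for_resolution(res_deg: float, freq_hz: float = 77e9) -> float:
    """Approximate aperture D (metres) needed for angular resolution res_deg,
    using the rule of thumb theta ~ lambda / D."""
    return wavelength(freq_hz) / math.radians(res_deg)


for res in (5.0, 1.0, 0.5):
    print(f"{res:>4} deg -> {aperture_for_resolution(res) * 100:.1f} cm")
```

At 77 GHz (λ ≈ 3.9 mm), halving the resolution angle doubles the required aperture. A 0.5 degree resolution needs an aperture of roughly 45 cm, which shows why a single high-resolution antenna is hard to fit behind a bumper.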

Distributed aperture radar

Distributed aperture radar (DAR) is an approach now gaining traction that could solve the ADAS sensing challenge. Today's forward-facing automotive radars typically use medium-range radar (MRR) modules with up to 16 virtual antenna channels for resolving objects in angle. Technology innovations already point to high-resolution radar sensor modules with up to 256 virtual channels, offering significantly improved performance.

These sensors combine cutting-edge antenna and transceiver design with sophisticated software algorithms to create a “synthetic” aperture that emulates an antenna arrangement much larger than its physical dimensions. While this approach improves spatial resolution, the required hardware is extensive, consumes a lot of power and still occupies considerable space. These physical constraints limit the approach and make vehicle integration challenging.
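Virtual channels come from multiple-input multiple-output (MIMO) operation, where each transmit/receive antenna pair acts as one channel. The sketch below shows how a small physical array produces a larger virtual one, and what the 16 and 256 channel counts imply for resolution under the rule of thumb θ ≈ 2/N radians for a filled, half-wavelength-spaced array. Several assumptions apply: positions are in wavelengths, targets are in the far field, and the 4 Tx / 4 Rx layout and the helper names are hypothetical rather than taken from any product.

```python
import math


def virtual_array(tx_positions: list[float], rx_positions: list[float]) -> list[float]:
    """Virtual element positions of a MIMO array, in wavelengths.

    For a far-field target, the phase on the path Tx_i -> target -> Rx_j
    matches that of a single receive element at tx_i + rx_j, so the virtual
    array is the set of pairwise sums (one channel per Tx/Rx pair).
    """
    return sorted(t + r for t in tx_positions for r in rx_positions)


def approx_resolution_deg(n_virtual: int) -> float:
    """Rule-of-thumb boresight resolution of a filled array at half-wavelength
    spacing: aperture D ~ n * lambda / 2, so theta ~ lambda / D = 2 / n radians."""
    return math.degrees(2.0 / n_virtual)


# Hypothetical layout: 4 Rx spaced 0.5 lambda apart, 4 Tx spaced 2 lambda apart.
rx = [0.5 * k for k in range(4)]
tx = [2.0 * k for k in range(4)]
va = virtual_array(tx, rx)
print(f"{len(tx) + len(rx)} physical antennas -> {len(va)} virtual channels")
print(f"virtual aperture spans {va[-1] - va[0]} wavelengths")

for n in (16, 256):  # channel counts mentioned in the text
    print(f"{n:3d} virtual channels -> ~{approx_resolution_deg(n):.2f} deg")
```

Eight physical antennas yield a filled 16-element virtual array. By this estimate, 16 channels give roughly 7 degrees of resolution, while 256 channels bring it below half a degree, at the cost of far more transceiver hardware.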

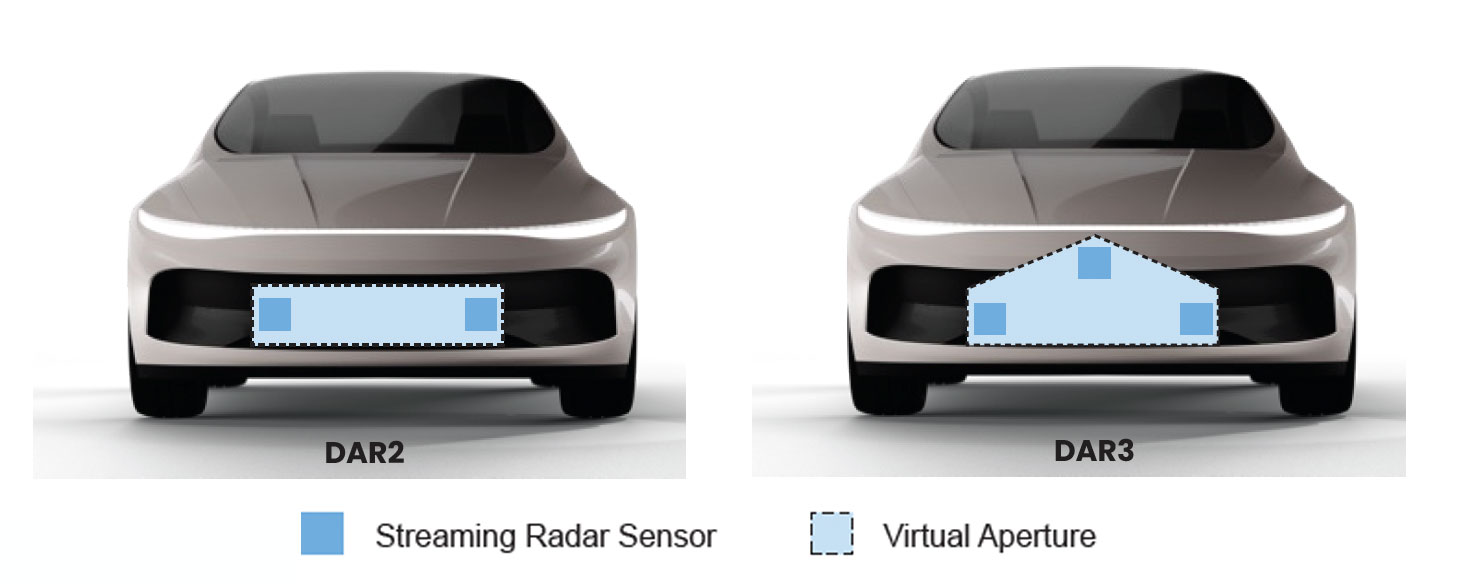

The distributed aperture radar alternative is to place two or more physically displaced, forward-facing medium-range radar sensors. By operating the sensors coherently and combining their outputs with sensor fusion techniques, DAR creates a large virtual aperture with an azimuth resolution of 0.5 degrees or better. Displacing the sensors makes it much easier to detect individual objects that are close together, allowing more precise vehicle positioning and object recognition. Using three sensors further improves performance, as illustrated.
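An idealized estimate shows why displacing sensors helps. The rule of thumb θ ≈ λ/D (λ wavelength, D aperture) is applied to a single MRR and to a pair of MRRs whose outer edges are 0.5 m or 1.0 m apart. Assumptions: a 77 GHz carrier, a single module modelled as 16 virtual channels at half-wavelength spacing, and hypothetical sensor spacings. The combined aperture is sparse, mostly empty between the modules, so a real system must also suppress the resulting angular ambiguities (grating lobes). This sketch ignores that step.

```python
import math

C = 299_792_458.0  # speed of light, m/s
FREQ_HZ = 77e9     # assumed carrier within the 76 to 81 GHz automotive band
LAM = C / FREQ_HZ  # wavelength, about 3.9 mm


def resolution_deg(aperture_m: float) -> float:
    """Idealized angular resolution theta ~ lambda / D, in degrees."""
    return math.degrees(LAM / aperture_m)


single = 16 * LAM / 2  # one MRR: 16 virtual channels at half-wavelength spacing
spans = {
    "single MRR": single,
    "two MRRs, 0.5 m span": 0.5,  # hypothetical sensor spacing
    "two MRRs, 1.0 m span": 1.0,  # hypothetical sensor spacing
}
for label, d in spans.items():
    print(f"{label:<22} D = {d * 100:5.1f} cm -> ~{resolution_deg(d):.2f} deg")
```

Spreading two modules about half a metre apart is enough, in this idealized model, to reach the sub-0.5 degree range. The modules themselves stay small.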

Creating a larger field of view, virtually

Distributed aperture radar combines multiple medium-range radar sensors to create a large virtual aperture. (Source: NXP)

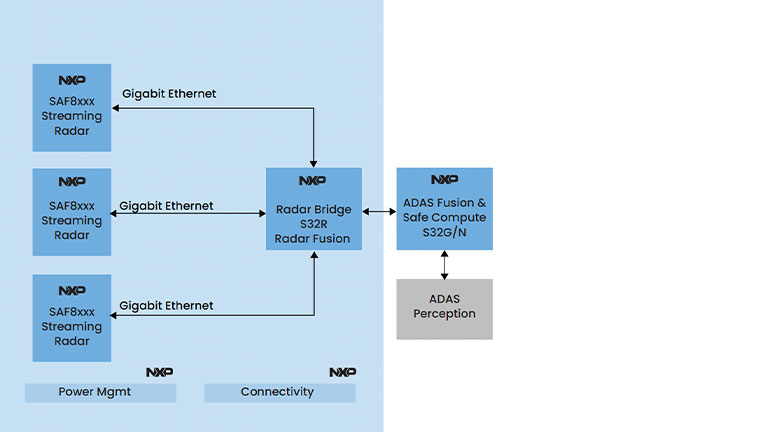

The block diagram illustrates an example of a distributed aperture radar using three NXP SAF8xxx streaming radar transceivers connected to an NXP S32R radar processor that acts as the radar fusion processor. The S32R family provides safe and secure radar processing with dedicated radar processing acceleration.

Advanced processors for radar processing

The functional architecture of a distributed aperture radar subsystem using three NXP SAF8xxx radar sensors to capture data and a radar fusion processor to bring it together, before feeding the result into the ADAS ECUs. (Source: NXP)

The S32R fusion processor and the SAF8xxx transceivers integrate the Zendar DAR software stack to achieve sub-nanosecond synchronization, delivering a single image from multiple medium-range radar sensors.
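Synchronization precision matters because radio waves travel about 30 cm per nanosecond. Any timing offset between sensors therefore turns into a path-length error when their measurements are combined. The sketch below is only that arithmetic. It is not a model of the Zendar stack, whose internals the article does not describe, and the function name is hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def path_error_cm(timing_offset_s: float) -> float:
    """Propagation path-length error (cm) equivalent to a timing offset between sensors."""
    return C * timing_offset_s * 100.0


for dt_ps in (1000, 100, 10):  # 1 ns, 100 ps, 10 ps
    print(f"{dt_ps:5d} ps offset -> {path_error_cm(dt_ps * 1e-12):6.2f} cm of path")
```

A 1 ns offset corresponds to about 30 cm of path, so synchronizing well below a nanosecond keeps this error to a few centimetres or less. Coherent combination at 77 GHz is stricter still, since one carrier cycle lasts only about 13 ps. How residual phase errors are handled is not covered here.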

Differentiating roadside objects from people

DAR gives automotive manufacturers and Tier 1 suppliers an opportunity to improve object detection and accelerate autonomous driving capabilities without requiring significantly more front-facing space. With its high resolution and a detection range of up to 340 meters, a DAR subsystem can fast-track ADAS development.

DAR enables more accurate object detection

Comparing the point detection accuracy of DAR versus the current solution, a single medium-range radar (Source: NXP)

One of the key benefits of DAR is its ability to separate two objects that each have a low radar cross section at long range. A single MRR sensor can do this only over short distances, so DAR's extended detection range makes it highly suitable for ADAS features such as emergency braking and adaptive cruise control.
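Angular resolution matters more at range because the lateral distance it can resolve grows linearly with distance: Δx ≈ R·θ, with θ in radians (a small-angle approximation). The rough comparison below uses a ~7 degree figure for a 16-channel single MRR, a rule-of-thumb estimate (θ ≈ 2/N radians) rather than a datasheet value, and the 0.5 degree azimuth resolution quoted for DAR. The function and constant names are illustrative.

```python
import math


def cross_range_m(range_m: float, resolution_deg: float) -> float:
    """Approximate lateral (cross-range) resolution at a given range: R * theta."""
    return range_m * math.radians(resolution_deg)


SINGLE_MRR_DEG = math.degrees(2 / 16)  # ~7.2 deg, rule-of-thumb estimate for 16 channels
DAR_DEG = 0.5                          # azimuth resolution quoted for DAR

for r in (50, 150, 300):
    print(f"{r:3d} m: single MRR ~{cross_range_m(r, SINGLE_MRR_DEG):5.1f} m, "
          f"DAR ~{cross_range_m(r, DAR_DEG):4.2f} m")
```

Two objects at the same range and speed that sit closer together laterally than Δx tend to merge into one detection in angle. Radar can still separate them by range or Doppler if those differ. At highway distances, the single-MRR figure grows to tens of metres, wider than a road, while the DAR figure stays around a lane width or less.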

DAR provides greater accuracy over longer distances

Distributed aperture radar’s spatial displacement enables the sensor fusion software to identify and localize distant objects, such as pedestrians. (Source: NXP)

DAR speeds up autonomous driving and ADAS innovations

As vehicle manufacturers strive to enhance ADAS functions and deliver full autonomy, the need for compact, reliable and power-efficient all-around detection has never been greater. To date, combining disparate sensor technologies has created integration and space challenges.

With DAR, engineers can architect all-encompassing object detection capability using a single medium-range radar sensor technology. Together with high-performance sensor fusion software, DAR will transform ADAS development to achieve full autonomy.