Autonomous Driving and the Big Leap from L1 to L5

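The automotive industry has undoubtedly painted an overly "rosy future" for autonomous driving. Still, the idea is genuinely appealing: not only freedom from the dangers of driving, but hands off the wheel, leaning back in the seat, and free from stress.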

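The fact remains, however, that fully autonomous driving is still a long way off. Industry insiders can list any number of obstacles, from technical and safety challenges to business models, laws, and regulations, so it is easy to see why autonomous driving is not just around the corner. Yet the momentum is there: manufacturers are racing toward this end goal and working to move up the levels, whether or not they can ultimately reach it. We need to think carefully about how to travel this difficult road and how to clear the obstacles along it.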

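From a technical standpoint, the challenge in realizing autonomous driving has always been scalability, because the end goal of fully automated driving will be reached in stages rather than in a single step. The real challenge is therefore to build a scalable technical architecture that can be used throughout this long development process and that can handle the different computing power and safety requirements of each level of autonomy. Along the way, products ranging from high end to low end will emerge to serve the needs of different user markets, so the architecture must also be able to incorporate new technologies quickly and turn them into revenue.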

Levels of Autonomous Driving

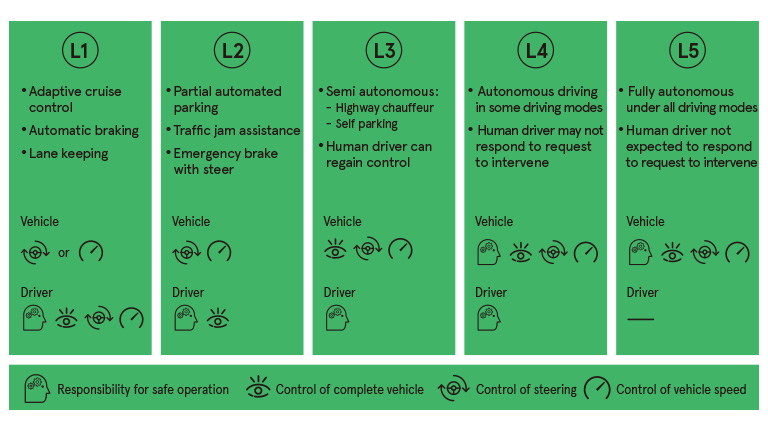

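To grasp the full size of this challenge, we first need to look at how the levels of autonomous driving are defined. According to SAE International's definition, autonomous driving is divided into five levels, L1 through L5: driver assistance, partial automation, conditional automation, high automation, and full automation.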

Figure 1: Levels of autonomous driving (Image courtesy of NXP)

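Looking at what the five levels do (Figure 1), we can see that they are defined by the degree of driving control: the lower the level, the more control the driver retains over the vehicle. L1, for example, covers several functions, such as adaptive cruise control, automatic braking, and lane keeping assist. In practice, these functions let the vehicle automatically control acceleration or deceleration in a single direction, but they cannot perform the actual driving task. The driver has absolute control of the vehicle, observes the surroundings, and makes the judgments and decisions. At L5, by contrast, the vehicle is fully autonomous and needs no driver intervention. In fact, the driver has almost no say in how the vehicle is driven.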

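As the description so far shows, the "big leap" occurs between L3 and L4. L1 through L3 systems are driver-centric and built on manual control of the vehicle, whereas at L4 and L5 the vehicle is essentially a robot that operates independently, almost always without human intervention. In fact, however autonomous driving is "marketed," what exists today is no more than ADAS (advanced driver assistance systems). Only at L4 and L5 do we truly enter the realm of fully automated driving.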
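The level split described above can be written down as a small lookup. This is only an illustrative sketch: the names `SaeLevel` and `is_driver_centric` are made up for this example and do not come from SAE or NXP, and the L3/L4 boundary simply mirrors the article's own description.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """The five levels as named in this article (hypothetical helper type)."""
    L1 = 1  # driver assistance
    L2 = 2  # partial automation
    L3 = 3  # conditional automation
    L4 = 4  # high automation
    L5 = 5  # full automation


def is_driver_centric(level: SaeLevel) -> bool:
    """True for L1 to L3, where the system is built around manual control.

    The article places the "big leap" between L3 and L4, so the
    boundary is hard-coded at L3 here.
    """
    return level <= SaeLevel.L3


if __name__ == "__main__":
    for level in SaeLevel:
        kind = "driver-centric (ADAS)" if is_driver_centric(level) else "vehicle-centric"
        print(f"{level.name}: {kind}")
```

Encoding the levels as an ordered enum keeps the comparison `level <= SaeLevel.L3` readable and makes the single boundary easy to find.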

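Given the big leap that has to be made on the way from L1 to L5, the scalability of the technical architecture becomes an even greater challenge.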

A Scalable Technical Architecture

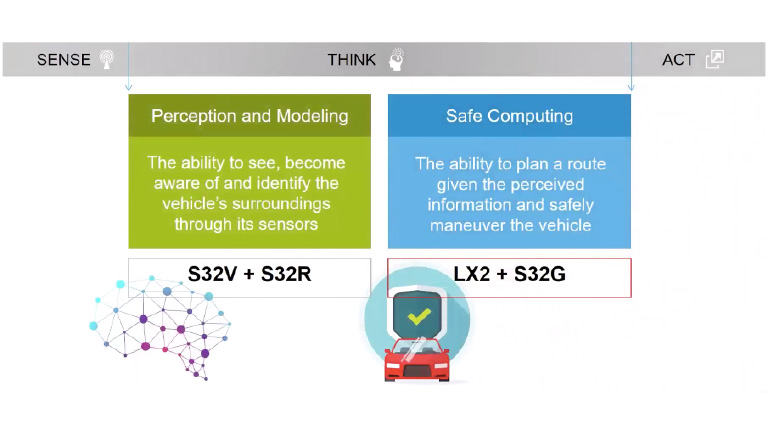

To begin tackling this challenge, we first need to understand what it involves. Current industry practice can be divided into two domains: detection and modeling, and safety computing.

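Specifically, detection and modeling aims to perform feature extraction, classification, and tracking on data. This data is acquired from the vehicle's sensors. The stage gathers information such as the target objects, their positions on the X, Y, and Z axes, and their speeds and angles, and outputs a network diagram. The output of detection and modeling can then serve as the input to safety computing. The job of safety computing is to merge the network diagram of target objects with environmental information, plan the optimal route, and dynamically predict possible changes over the next few seconds. The result of the calculation is two control signals: one for the vehicle's acceleration and deceleration, and one for steering. This calculation is repeated continuously, producing coherent automated driving behavior.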
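The two-stage flow described above can be sketched as a pair of functions that run once per cycle. This is a toy illustration under stated assumptions, not NXP's software: every name here (`TrackedObject`, `ControlCommand`, `detect_and_model`, `safety_compute`) is hypothetical, the "planning" is reduced to a single deterministic gap check, and the thresholds are arbitrary.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TrackedObject:
    """One entry in the output of the detection and modeling stage."""
    label: str
    x: float            # metres ahead of the ego vehicle
    y: float            # lateral offset in metres
    z: float            # height in metres
    speed: float        # m/s along the x axis
    heading_deg: float  # direction of travel


@dataclass(frozen=True)
class ControlCommand:
    """The two control signals produced by safety computing."""
    acceleration: float  # m/s^2, negative means braking
    steering_deg: float


def detect_and_model(raw_detections: List[Dict]) -> List[TrackedObject]:
    """Stand-in for feature extraction, classification, and tracking."""
    return [TrackedObject(**d) for d in raw_detections]


def safety_compute(objects: List[TrackedObject], ego_speed: float,
                   horizon_s: float = 3.0, lane_half_width: float = 1.5,
                   min_gap: float = 5.0) -> ControlCommand:
    """Deterministic toy planner: brake if an in-lane object is predicted
    to be closer than min_gap metres after horizon_s seconds."""
    for obj in objects:
        if obj.x <= 0 or abs(obj.y) > lane_half_width:
            continue  # behind us or outside our lane
        predicted_gap = obj.x + (obj.speed - ego_speed) * horizon_s
        if predicted_gap < min_gap:
            return ControlCommand(acceleration=-3.0, steering_deg=0.0)
    return ControlCommand(acceleration=0.0, steering_deg=0.0)


def run_cycle(raw_detections: List[Dict], ego_speed: float) -> ControlCommand:
    """One pass of the loop: detection output feeds safety computing."""
    return safety_compute(detect_and_model(raw_detections), ego_speed)
```

Calling `run_cycle` repeatedly with fresh sensor data corresponds to the repeated calculation described in the text. Keeping the two stages as separate functions with a plain data structure between them also mirrors the idea that they can run on different processors.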

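Detection and modeling and safety computing serve different functions, and their specific technical requirements can differ as well. The differences show up mainly in functional safety and computational efficiency.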

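In detection and modeling, front-end input comes from multiple sensors, including cameras, millimeter-wave radar, and lidar. At least two of these three sensor types must meet the requirements for comprehensive and accurate data capture in order to cope with complex application scenarios. For the system as a whole to reach the ASIL-D functional safety level, only one of the many sensors in the detection and modeling system needs to meet ASIL-B functional safety requirements. In terms of computing power, fixed-point arithmetic can satisfy most of the data processing requirements of detection and modeling.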

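Safety computing is a very different story. Once the sensor data has been fused, there is no longer any data diversity or redundancy, so the safety computing processor must meet ASIL-D functional safety requirements on its own. The high computational complexity also calls for both fixed-point and floating-point arithmetic, with floating-point used mainly to accelerate vector and linear algebra. In addition, from a safety perspective, neural networks are unsuitable because their decisions cannot be traced back. Deterministic algorithms have to be used instead, and the computing architecture must be able to support them at the required level of efficiency.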

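Imagine a single computing architecture that performs both tasks, detection and modeling and safety computing, at the same time. It would clearly be uneconomical and inflexible: adding sensors or changing their types, for example, would mean replacing the entire processor structure. One way to design a truly scalable architecture is therefore to use two different processors, one dedicated to detection and modeling and one dedicated to safety computing, so that the system can be extended and upgraded later. This is the product design approach NXP has adopted, for example.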

Figure 2: NXP's scalable technical architecture for autonomous driving (Image courtesy of NXP)

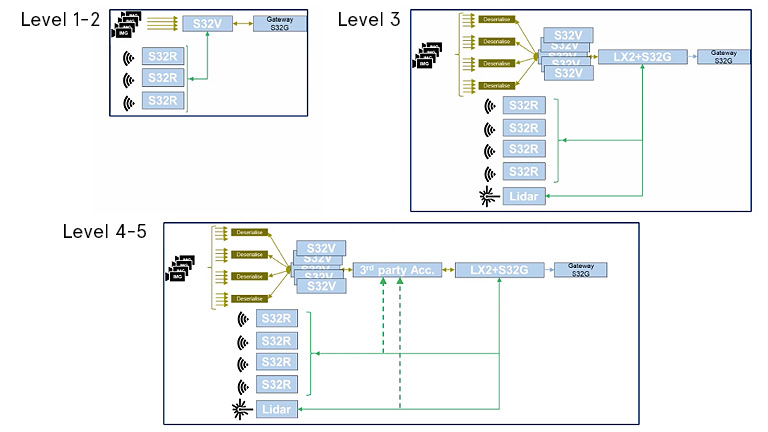

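For detection and modeling, NXP offers two processors, the S32V for vision detection and modeling and the S32R for radar detection applications, which process camera and millimeter-wave radar data. They have enough computing power to also act as auxiliary processors, which improves the safety of the overall system. This type of architecture can fully support ADAS applications at L1 and L2.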

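For L3 and L4 systems, cameras and radars, along with their S32V and S32R processors, can be added as needed. At the same time, safety computing is already indispensable at these levels, so NXP uses the S32G and the Layerscape LX2 for this task. The S32G serves as the safety processor and handles the vehicle's peripheral interfaces, while the LX2 boosts computing performance and supports high-bandwidth networking.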

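With this architecture, L4 and L5 can be reached by adding front-end detection and modeling processors and third-party accelerators to the system, which greatly increases the flexibility of system development.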
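One way to read the build-up described above is as a configuration that grows with the target level. The sketch below is an interpretation of the text only, not an NXP reference design: the function `processors_for`, the dictionary keys, and the exact level thresholds are assumptions made for illustration, and real systems would also vary the number of each processor.

```python
from typing import Dict, List


def processors_for(level: int) -> Dict[str, List[str]]:
    """Return a hypothetical processor plan for an autonomy level (1 to 5).

    Thresholds follow the article's narrative: detection and modeling
    processors from L1, safety computing from L3, and third-party
    accelerators on the way to L4 and L5.
    """
    if not 1 <= level <= 5:
        raise ValueError("level must be between 1 and 5")

    plan: Dict[str, List[str]] = {
        "detection_and_modeling": ["S32V", "S32R"],  # vision and radar
        "safety_computing": [],
        "accelerators": [],
    }
    if level >= 3:
        # Safety computing becomes indispensable at L3 and above.
        plan["safety_computing"] = ["S32G", "Layerscape LX2"]
    if level >= 4:
        # Assumed placeholder name; the article does not name a specific part.
        plan["accelerators"] = ["third-party accelerator"]
    return plan


if __name__ == "__main__":
    for lvl in range(1, 6):
        print(f"L{lvl}: {processors_for(lvl)}")
```

Each step only adds entries and never replaces the ones already there, which is the property that makes the architecture scalable in the sense the article describes.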

Figure 3: NXP's automated driving technical architecture is flexible and scalable (Image courtesy of NXP)

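With this approach, a single architecture can clearly cover the technical requirements of every level of autonomy from L1 to L5, letting developers pursue research and development for today's needs while pushing the limits of the technology toward the more distant future. Sooner or later, the "rosy future" of fully autonomous driving will surely arrive.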